Simple and fast CI/CD as code for Kubernetes using Azure DevOps

In this shot, we will learn how to build a simple CI/CD pipeline for an existing .NET Core application and use Azure DevOps to deploy it to Azure Kubernetes Service (AKS). The final pipeline will be reusable and easy to understand.

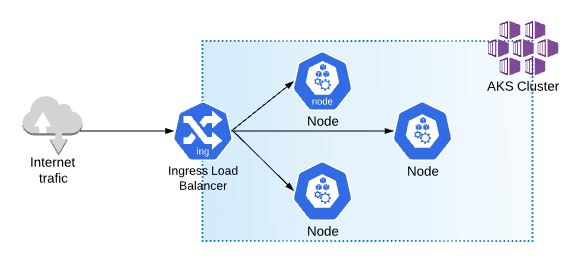

Deployment architecture

The deployment process fetches the code from the Git repository, restores the NuGet packages, builds the application, runs the unit tests, generates the test and code coverage reports, builds the Docker image and pushes it to the registry, and deploys it to the AKS cluster.

Azure Kubernetes Cluster setup

We will use a simple three-node AKS cluster with ingress-nginx as the ingress controller. We could also use Traefik, Istio, or even the standard Azure Load Balancer.

A detailed description of how we can set up the AKS cluster, including information on the Ingress setup and deployment scripts, can be found here.

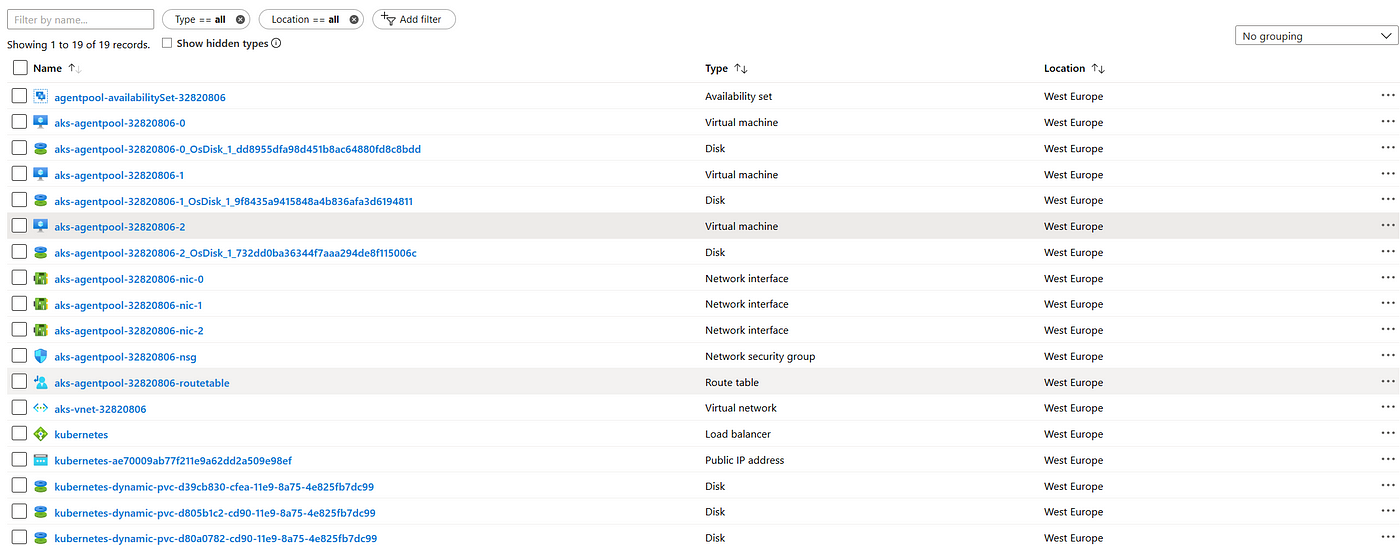

After deploying the AKS cluster, the main resource group will look like this:

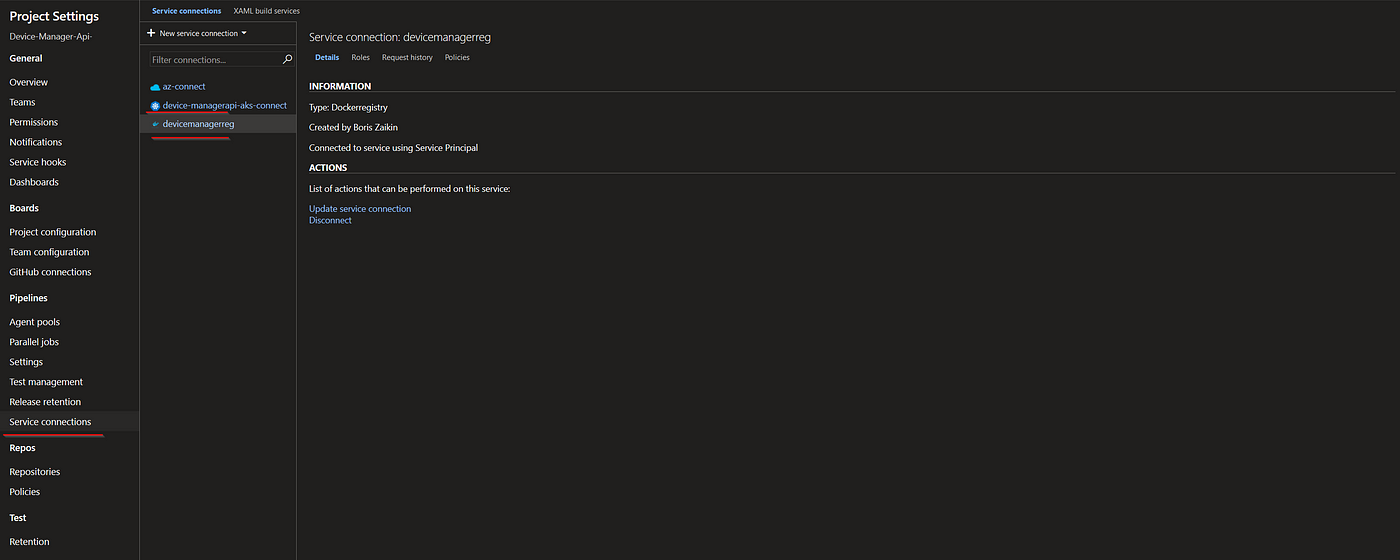

Set up service connections

Before we configure the main pipeline steps, we need to connect the Azure Container Registry (ACR) to the Azure Kubernetes Service by granting the AKS service principal pull access to the ACR. We also create an RBAC service principal with push access for Azure DevOps; once it exists, the pipelines can push and pull Docker images. Alternatively, this access can be configured through an Azure DevOps service connection.

```bash
AksResourceGroup='<rg-name>'
AksClusterName='<aks-cluster-name>'
AcrName='<acr-name>'
AcrResourceGroup='<acr-rg-name>'

# Get the id of the AKS service principal
ClientID=$(az aks show --resource-group "$AksResourceGroup" --name "$AksClusterName" --query "servicePrincipalProfile.clientId" --output tsv)

# Get the ACR registry resource id
AcrId=$(az acr show --name "$AcrName" --resource-group "$AcrResourceGroup" --query "id" --output tsv)

# Create role assignment
az role assignment create --assignee "$ClientID" --role acrpull --scope "$AcrId"

# Create a specific service principal for our Azure DevOps pipelines
# to be able to push and pull images and charts of our ACR.
# The service principal name is assumed to be "<acr-name>-push".
registryPassword=$(az ad sp create-for-rbac --name "${AcrName}-push" --scopes "$AcrId" --role acrpush --query password --output tsv)
```

Here is a detailed description of how we can configure the connection between ACR and AKS.

Azure DevOps service connection with AKS and ACR

By using a service connection, we can connect Azure DevOps to our pre-deployed AKS cluster, Azure Container Registry, Docker Registry (Docker Hub), and many other services.

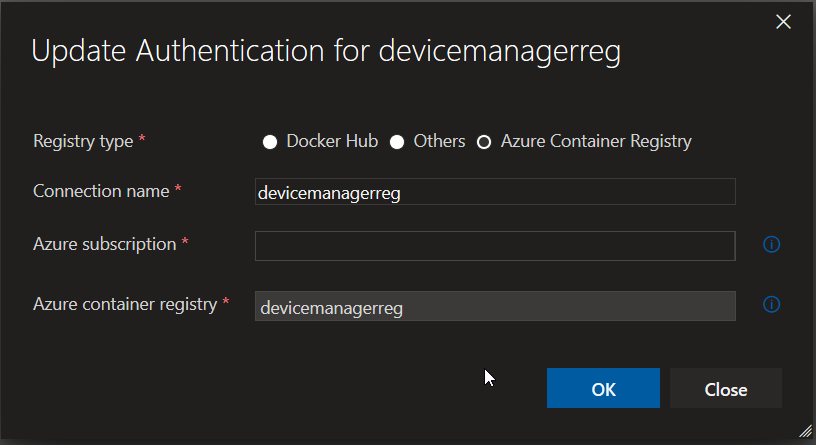

The creation of a connection to ACR is quite easy. We simply need to specify a connection name, a subscription, and a registry name.

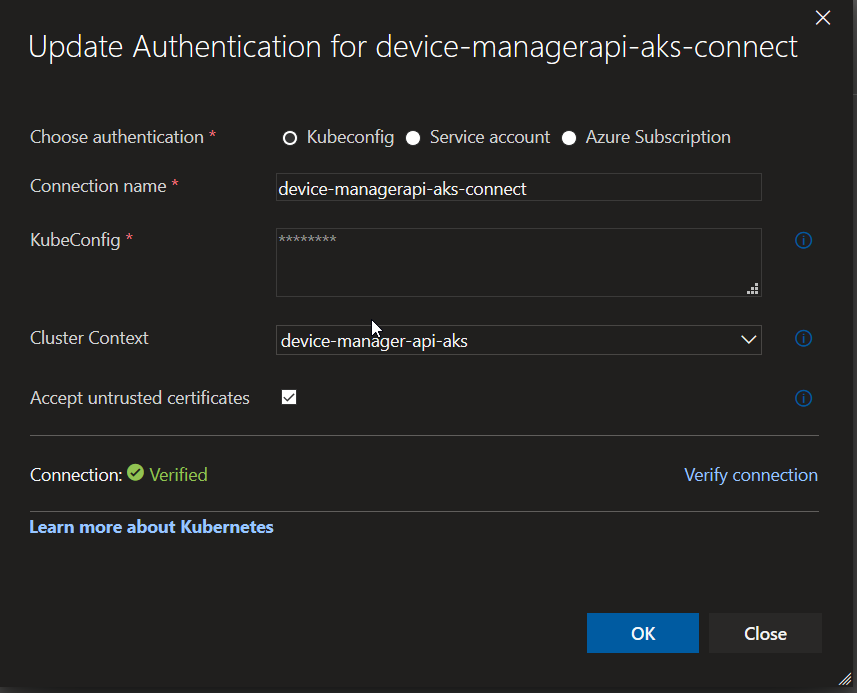

We can connect and authenticate to our AKS cluster using a kubeconfig file, a service account, or an Azure subscription. In this project, I use the kubeconfig option because it is one of the fastest: we just need to find the kubeconfig file, copy its contents, and choose the cluster context.

On Windows, the kubeconfig file is located at C:\Users\your_user_name\.kube\config. Here is the documentation on how we can find and work with kubeconfig on Linux and macOS.
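On Linux and macOS, kubectl reads the file named by the KUBECONFIG environment variable and falls back to ~/.kube/config. The sketch below prints the file that would be consulted first. The function name `kubeconfig_path` is made up for this example, and it simplifies one detail: when KUBECONFIG lists several files, kubectl merges all of them, while the sketch only reports the first.

```shell
#!/usr/bin/env bash
# Sketch: print the kubeconfig file kubectl would read first.
# kubectl checks the KUBECONFIG environment variable (a colon-separated
# list on Linux and macOS) and falls back to ~/.kube/config.
kubeconfig_path() {
  local candidates="${KUBECONFIG:-$HOME/.kube/config}"
  # Keep only the first entry of a colon-separated list.
  printf '%s\n' "${candidates%%:*}"
}

kubeconfig_path
```

The contents of that file are what gets pasted into the service connection dialog; `kubectl config get-contexts` lists the contexts it defines.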

The first step of the pipeline is a script that restores all NuGet dependencies, builds the .NET Core application, runs the unit tests, and generates the code coverage reports. We also use the system variable $(Build.BuildNumber) as a tag for the coverage report. At the end, the test results are published as artifacts that Azure DevOps uses to build its analytics charts.

```yaml
- script: |
    dotnet restore
    dotnet build ./src/DeviceManager.Api/ --configuration $(buildConfiguration)
    dotnet test ./test/DeviceManager.Api.UnitTests/ --configuration $(buildConfiguration) --filter Category!=Integration --logger "trx;LogFileName=testresults.trx"
    dotnet test ./test/DeviceManager.Api.UnitTests/ --configuration $(buildConfiguration) --filter Category!=Integration /p:CollectCoverage=true /p:CoverletOutputFormat=cobertura /p:CoverletOutput=$(System.DefaultWorkingDirectory)/TestResults/Coverage/
    cd ./test/DeviceManager.Api.UnitTests/
    dotnet reportgenerator "-reports:$(System.DefaultWorkingDirectory)/TestResults/Coverage/coverage.cobertura.xml" "-targetdir:$(System.DefaultWorkingDirectory)/TestResults/Coverage/Reports" "-reportTypes:htmlInline" "-tag:$(Build.BuildNumber)"
    cd ../../
    dotnet publish ./src/DeviceManager.Api/ --configuration $(buildConfiguration) --output $BUILD_ARTIFACTSTAGINGDIRECTORY
```

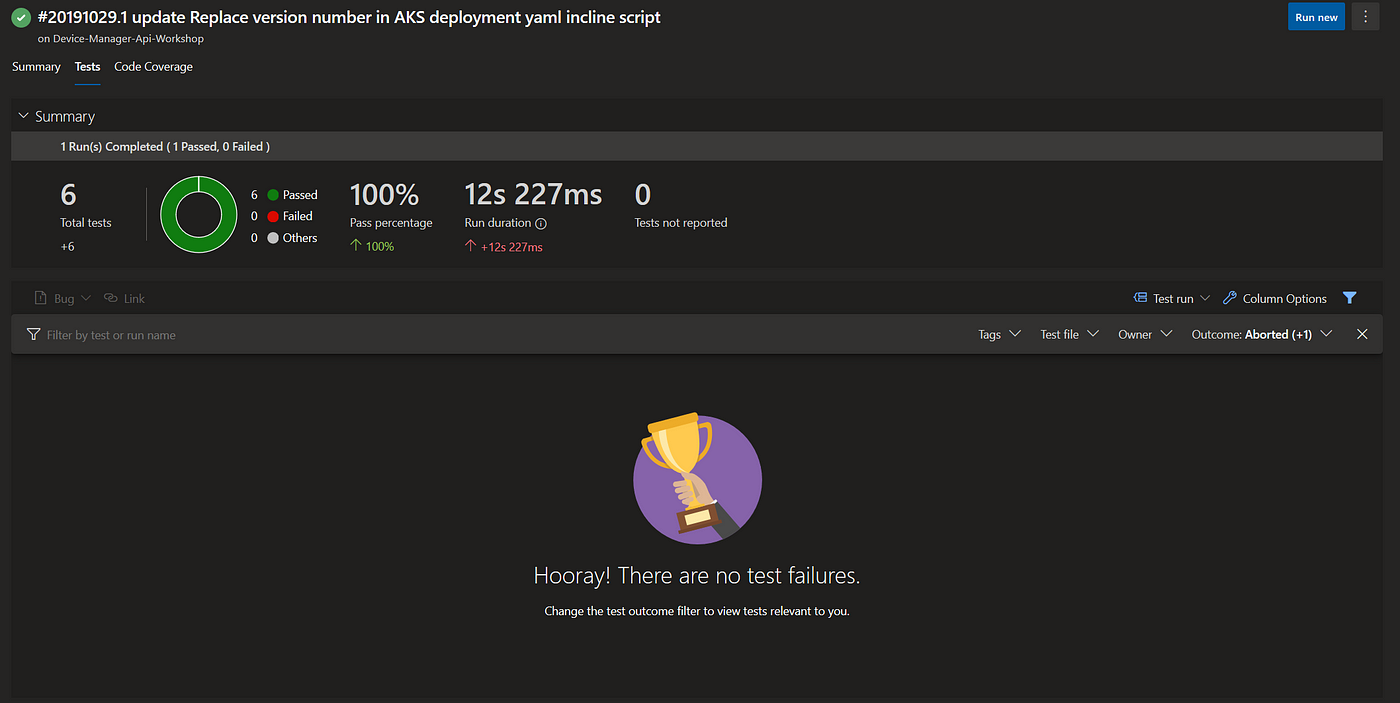

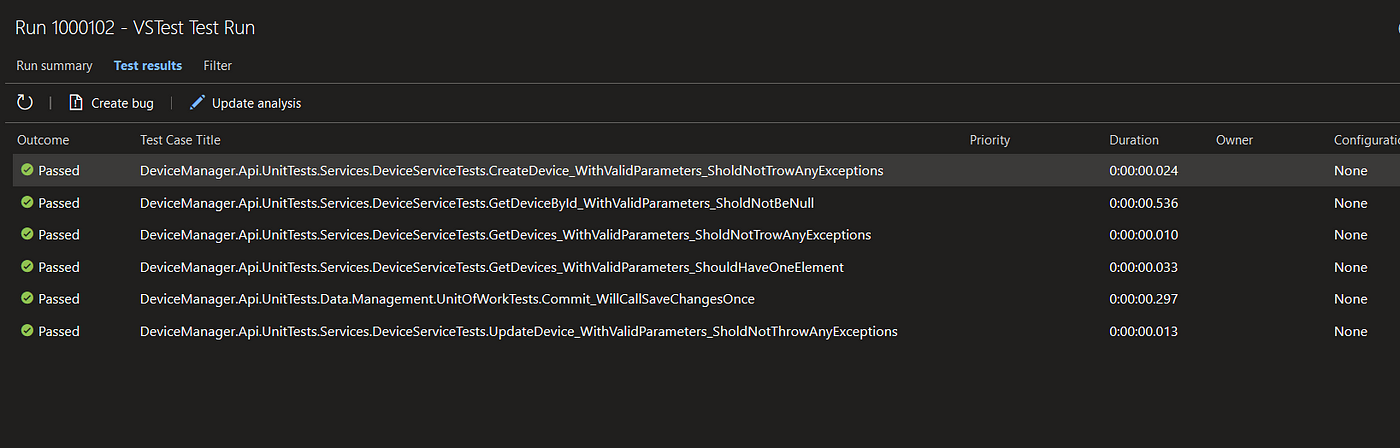

The test results and their statistics appear in the “Tests” section of Azure DevOps.

Code coverage results

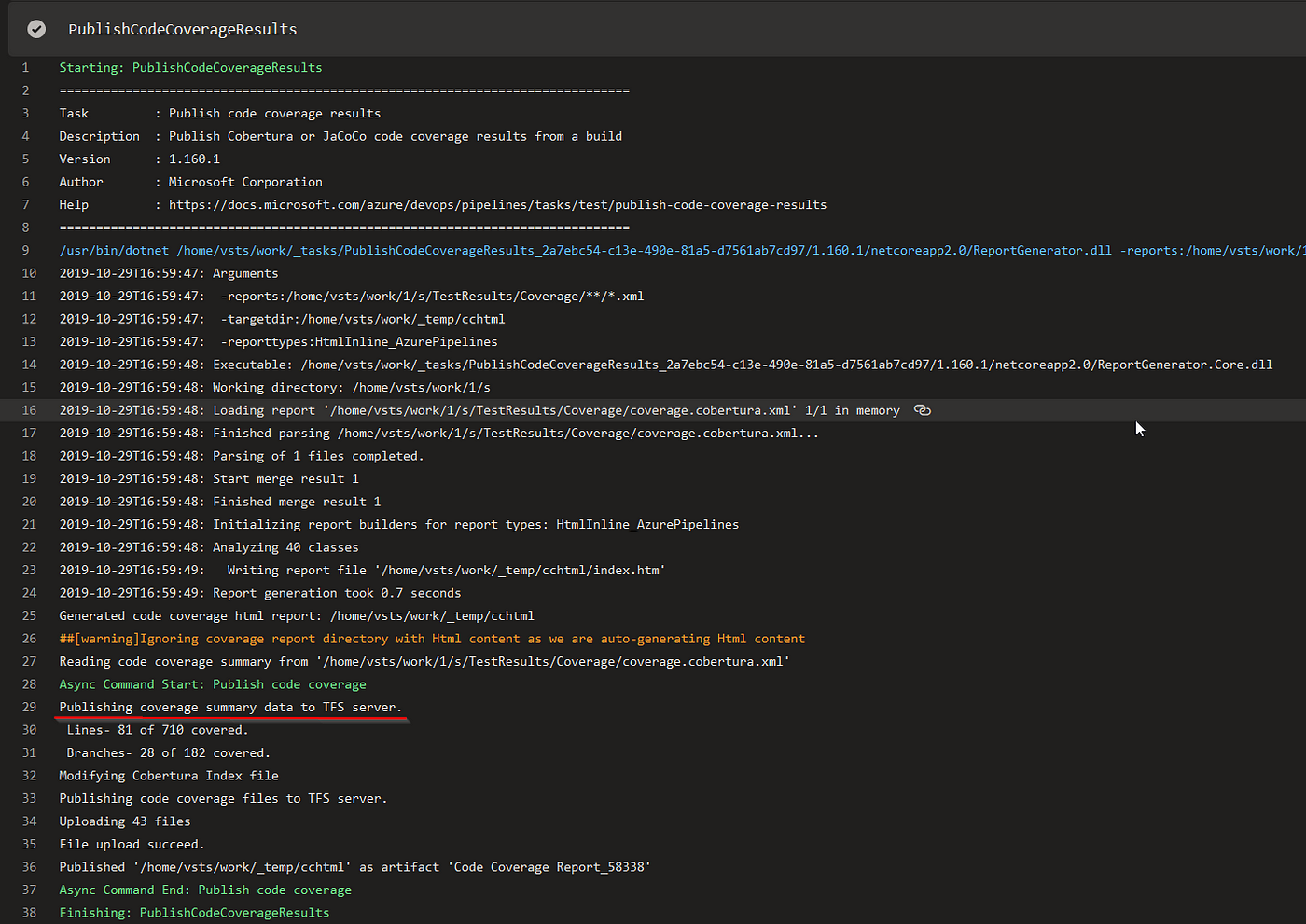

The next step in the process is to publish the code coverage results from the TestResults/Coverage folder under $(System.DefaultWorkingDirectory). The coverage data is collected in Cobertura format, and the HTML report is generated by ReportGenerator, a .NET Core tool.

```yaml
- task: PublishCodeCoverageResults@1
  inputs:
    codeCoverageTool: cobertura
    summaryFileLocation: $(System.DefaultWorkingDirectory)/TestResults/Coverage/**/*.xml
    reportDirectory: $(System.DefaultWorkingDirectory)/TestResults/Coverage/Reports
    failIfCoverageEmpty: false
```

The first summary statistics are already available, and the detailed file-by-file report is in the “Code Coverage” tab. This report is based on the generated XML reports.
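The generated XML can also be inspected directly, for example to print the overall coverage in a build log. The sketch below reads the `line-rate` attribute from the root `<coverage>` element of a Cobertura file. The sample file and the function name `line_coverage_percent` are assumptions made for this illustration; a real file from the pipeline would sit under TestResults/Coverage.

```shell
#!/usr/bin/env bash
# Sketch: read the overall line coverage from a Cobertura summary file.
# The sample file below is hypothetical and heavily trimmed.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
<?xml version="1.0" encoding="utf-8"?>
<coverage line-rate="0.8125" branch-rate="0.5" version="1.9" timestamp="0">
  <packages />
</coverage>
EOF

# Print the line-rate attribute of the root <coverage> element as a percentage.
line_coverage_percent() {
  local rate
  rate="$(sed -n 's/.*<coverage[^>]* line-rate="\([0-9.]*\)".*/\1/p' "$1" | head -n 1)"
  awk -v r="$rate" 'BEGIN { printf "%.2f\n", r * 100 }'
}

line_coverage_percent "$sample"
```

Text matching like this is only good enough for a quick look; anything that gates a build on coverage would be safer with a real XML parser.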

Building the container and pushing it to the ACR

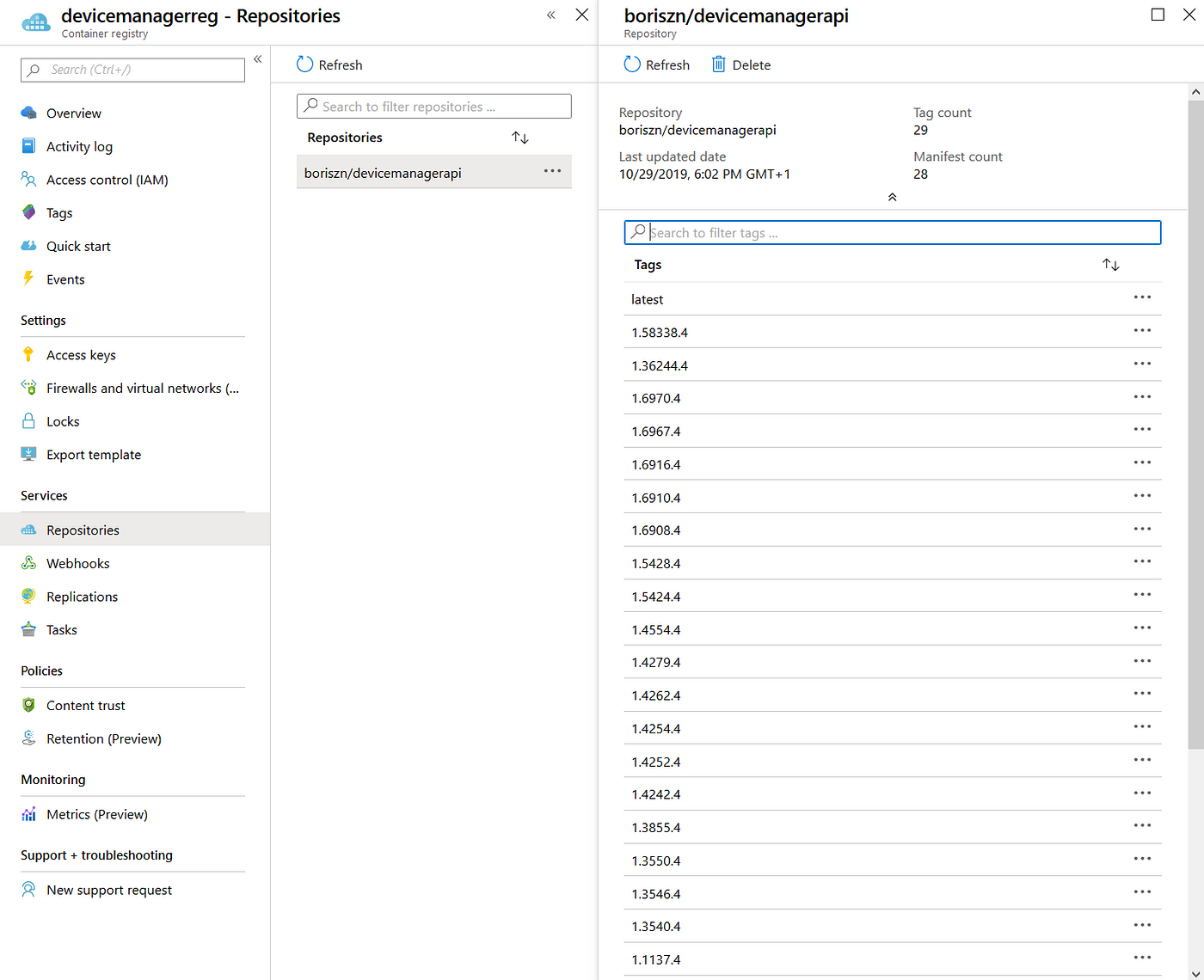

After the previous steps have completed, the project needs to be packaged into a container. For this, we will use version 1 of the Docker task (Docker@1). We need to specify the path to the Dockerfile, provide an image name (e.g., boriszn/devicemanagerapi), and a tag (e.g., 1.102.1). The last step is to specify a container registry, e.g., devicemanagerreg.azurecr.io.

For tag creation, we will use semantic versioning with hard-coded major and patch numbers and the build ID in the middle, which keeps the pipeline simple. However, we can also use different approaches to build a tag. For example, we can pass the version in as variables that are required to run the pipeline, or take it from the Git version.
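As a rough illustration of this tagging scheme, the sketch below assembles a tag with the same shape as the pipeline variable `1.$(build.buildId).4`. The function name `make_image_tag` and the fallback build ID are assumptions for the example. On an Azure DevOps agent, the build ID is exposed to scripts as the BUILD_BUILDID environment variable.

```shell
#!/usr/bin/env bash
# Sketch: build an image tag shaped like the pipeline's '1.$(build.buildId).4'.
# MAJOR and PATCH are hard-coded; only the build ID varies between runs.
MAJOR=1
PATCH=4

make_image_tag() {
  local build_id="$1"
  # Reject anything that is not a plain non-negative integer.
  case "$build_id" in
    ''|*[!0-9]*) echo "build id must be numeric: '$build_id'" >&2; return 1 ;;
  esac
  printf '%s.%s.%s\n' "$MAJOR" "$build_id" "$PATCH"
}

# 58338 is a stand-in used when the script runs outside a pipeline.
make_image_tag "${BUILD_BUILDID:-58338}"
```

Validating the build ID up front keeps a malformed value from ending up in an image tag, where it would only fail later at push time.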

```yaml
- task: Docker@1
  displayName: 'Containerize the application'
  inputs:
    azureSubscriptionEndpoint: $(serviceConnection)
    azureContainerRegistry: $(containerRegistry)
    dockerFile: './src/DeviceManager.Api/Dockerfile'
    imageName: '$(fullImageName)'
    includeLatestTag: true
```

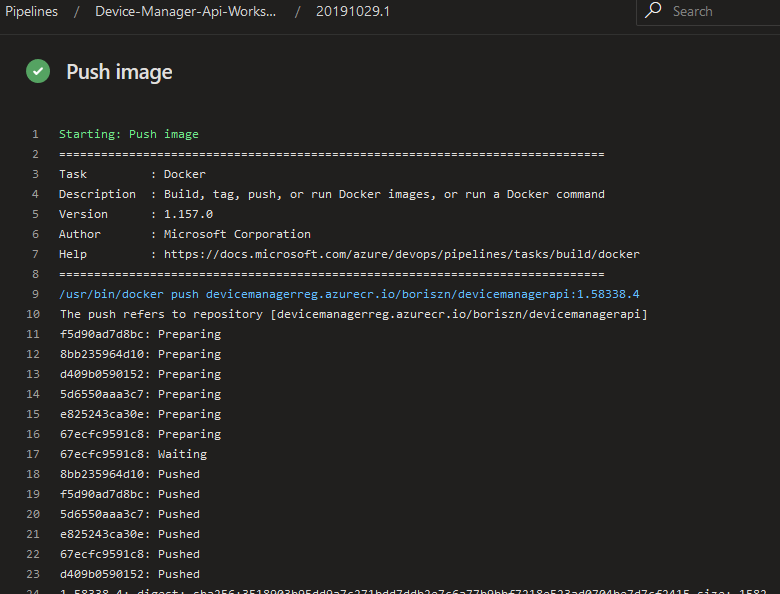

The next step is to push our Dockerized application to the Azure Container Registry. Here, we specify the push command and the image to push. Note that we push two images: the first is tagged with the version (1.58338.4 in this example) and the second with latest.

```yaml
- task: Docker@1
  displayName: 'Push image'
  inputs:
    azureSubscriptionEndpoint: $(serviceConnection)
    azureContainerRegistry: $(containerRegistry)
    command: 'Push an image'
    imageName: '$(fullImageName)'

- task: Docker@1
  displayName: 'Push latest image'
  inputs:
    azureSubscriptionEndpoint: $(serviceConnection)
    azureContainerRegistry: $(containerRegistry)
    command: 'Push an image'
    imageName: '$(imageName):latest'
```
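To see what the two push steps above should leave in the registry, the sketch below composes both image references from the pipeline's variable values. It assumes the Docker task prefixes the image name with the registry host name. The function name `image_ref` is hypothetical, and the build ID inside the tag is just the example value used in this shot.

```shell
#!/usr/bin/env bash
# Sketch: compose the two image references that the push steps publish.
# Values mirror the pipeline variables; the build ID in the tag is an example.
CONTAINER_REGISTRY='devicemanagerreg.azurecr.io'
IMAGE_NAME='boriszn/devicemanagerapi'
IMAGE_TAG='1.58338.4'

# Print "<registry>/<repository>:<tag>" for a given tag.
image_ref() {
  printf '%s/%s:%s\n' "$CONTAINER_REGISTRY" "$IMAGE_NAME" "$1"
}

image_ref "$IMAGE_TAG"
image_ref latest
```

The versioned reference is the one the AKS deployment should point to, since latest moves with every build.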

AKS deployment steps

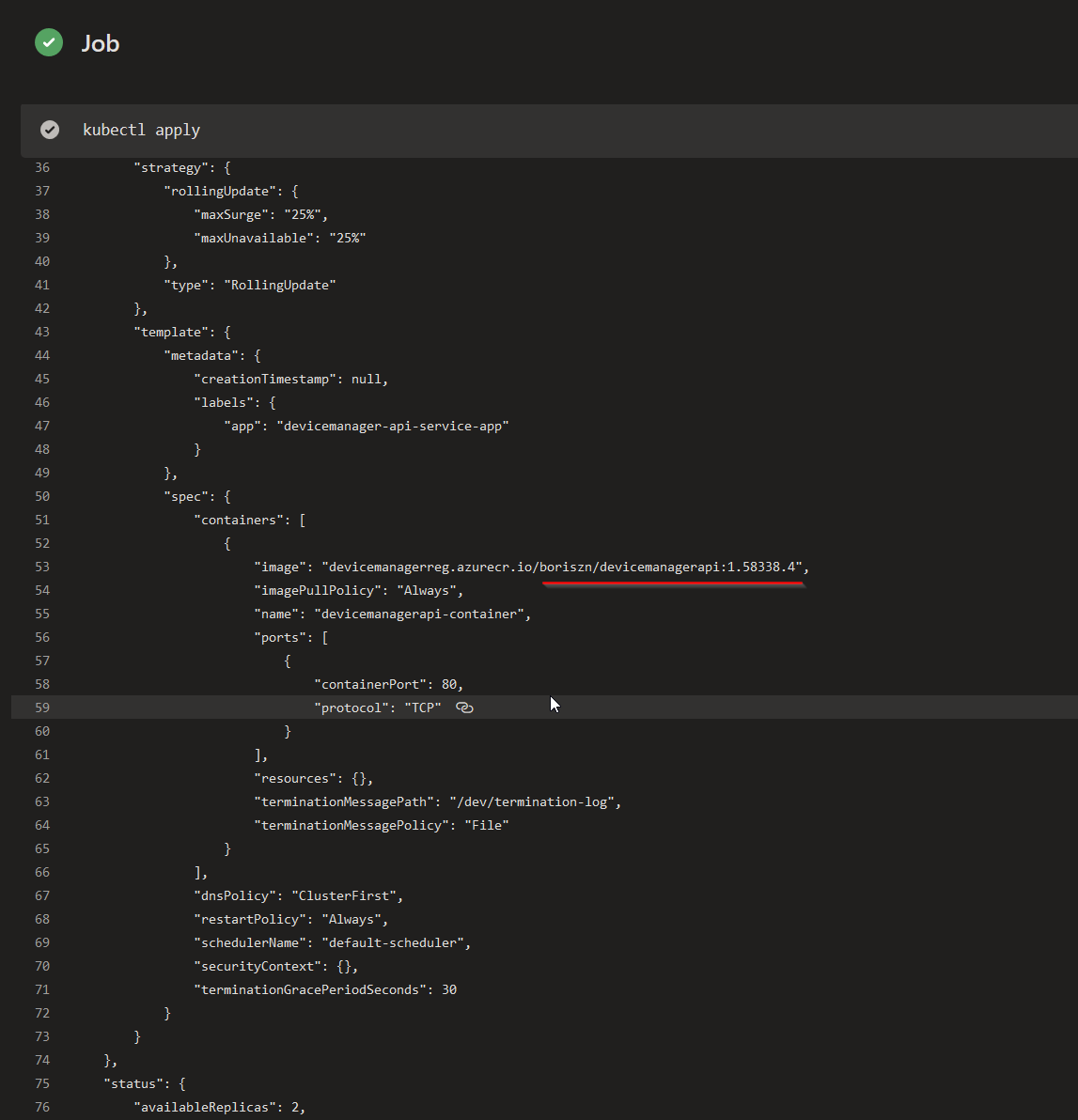

For the AKS deployment, we first need to replace the ##BUILD_ID## placeholder in the AKS deployment YAML file with the image tag. The script then prints the updated file so that it is visible in the Azure DevOps log after a successful run.

```yaml
- task: PowerShell@2
  displayName: 'Replace version number in AKS deployment yaml'
  inputs:
    targetType: inline
    script: |
      # Replace image tag in aks YAML
      ((Get-Content -path $(aksKubeDeploymentYaml) -Raw) -replace '##BUILD_ID##','$(imageTag)') |
      Set-Content -Path $(aksKubeDeploymentYaml)

      # Get content
      Get-Content -path $(aksKubeDeploymentYaml)
```
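The deployment file itself is not shown in this shot, so the sketch below uses a hypothetical, trimmed-down manifest to illustrate how the placeholder works. The resource names, labels, and the `render_manifest` function are assumptions; only the ##BUILD_ID## marker, the registry, and the image name come from the pipeline. The substitution uses sed rather than PowerShell and prints the result instead of rewriting the file in place.

```shell
#!/usr/bin/env bash
# Sketch: a hypothetical aks-deployment.yaml and the tag substitution.
manifest="$(mktemp)"
cat > "$manifest" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: device-manager-api
spec:
  replicas: 1
  selector:
    matchLabels:
      app: device-manager-api
  template:
    metadata:
      labels:
        app: device-manager-api
    spec:
      containers:
        - name: device-manager-api
          image: devicemanagerreg.azurecr.io/boriszn/devicemanagerapi:##BUILD_ID##
EOF

# Replace every ##BUILD_ID## placeholder with the given tag and print the result.
# Tags made of digits and dots are safe here; a tag containing "/" or "&"
# would need escaping before being passed to sed.
render_manifest() {
  sed "s/##BUILD_ID##/$2/g" "$1"
}

render_manifest "$manifest" "1.58338.4"
```

Keeping a placeholder in the committed manifest means the file stays the same between builds, and the exact image version is fixed only at deployment time.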

After that, we will run the kubectl apply command against the AKS cluster.

The two important parameters here are the path to our cluster deployment YAML file (./deployment/aks-deployment.yaml) and the cluster name (device-manager-api-aks). The other parameters are explained in the previous sections of this shot.

```yaml
- task: Kubernetes@1
  displayName: 'kubectl apply'
  inputs:
    kubernetesServiceEndpoint: $(kubernetesServiceEndpoint)
    azureSubscriptionEndpoint: $(serviceConnection)
    azureResourceGroup: $(azureResourceGroupName)
    kubernetesCluster: $(aksClusterName)
    arguments: '-f $(aksKubeDeploymentYaml)'
    command: 'apply'
```

That’s it! The complete YAML pipeline that we configured in the previous few sections is listed below. This pipeline is easy to import to our Azure DevOps projects.

```yaml
trigger:
- develop

pool:
  vmImage: 'ubuntu-latest'

variables:
  imageName: 'boriszn/devicemanagerapi'
  buildConfiguration: 'Release'
  fullImageName: '$(imageName):$(imageTag)'
  containerRegistry: devicemanagerreg.azurecr.io
  imageTag: '1.$(build.buildId).4'
  serviceConnection: 'az-connect'
  azureResourceGroupName: 'boriszn-rg-aks-devicemanager-api-we'
  aksClusterName: 'device-manager-api-aks'
  aksKubeDeploymentYaml: './deployment/aks-deployment.yaml'
  kubernetesServiceEndpoint: 'device-managerapi-aks-connect'

steps:
# Restore, build, test, collect coverage, and publish the application
- script: |
    dotnet restore
    dotnet build ./src/DeviceManager.Api/ --configuration $(buildConfiguration)
    dotnet test ./test/DeviceManager.Api.UnitTests/ --configuration $(buildConfiguration) --filter Category!=Integration --logger "trx;LogFileName=testresults.trx"
    dotnet test ./test/DeviceManager.Api.UnitTests/ --configuration $(buildConfiguration) --filter Category!=Integration /p:CollectCoverage=true /p:CoverletOutputFormat=cobertura /p:CoverletOutput=$(System.DefaultWorkingDirectory)/TestResults/Coverage/
    cd ./test/DeviceManager.Api.UnitTests/
    dotnet reportgenerator "-reports:$(System.DefaultWorkingDirectory)/TestResults/Coverage/coverage.cobertura.xml" "-targetdir:$(System.DefaultWorkingDirectory)/TestResults/Coverage/Reports" "-reportTypes:htmlInline" "-tag:$(Build.BuildNumber)"
    cd ../../
    dotnet publish ./src/DeviceManager.Api/ --configuration $(buildConfiguration) --output $BUILD_ARTIFACTSTAGINGDIRECTORY

- task: PublishTestResults@2
  inputs:
    testRunner: VSTest
    testResultsFiles: '**/*.trx'

- task: PublishCodeCoverageResults@1
  inputs:
    codeCoverageTool: cobertura
    summaryFileLocation: $(System.DefaultWorkingDirectory)/TestResults/Coverage/**/*.xml
    reportDirectory: $(System.DefaultWorkingDirectory)/TestResults/Coverage/Reports
    failIfCoverageEmpty: false

- task: PublishBuildArtifacts@1

# Build the image and push both the versioned and the latest tag
- task: Docker@1
  displayName: 'Containerize the application'
  inputs:
    azureSubscriptionEndpoint: $(serviceConnection)
    azureContainerRegistry: $(containerRegistry)
    dockerFile: './src/DeviceManager.Api/Dockerfile'
    imageName: '$(fullImageName)'
    includeLatestTag: true

- task: Docker@1
  displayName: 'Push image'
  inputs:
    azureSubscriptionEndpoint: $(serviceConnection)
    azureContainerRegistry: $(containerRegistry)
    command: 'Push an image'
    imageName: '$(fullImageName)'

- task: Docker@1
  displayName: 'Push latest image'
  inputs:
    azureSubscriptionEndpoint: $(serviceConnection)
    azureContainerRegistry: $(containerRegistry)
    command: 'Push an image'
    imageName: '$(imageName):latest'

# Insert the image tag into the manifest and deploy it to AKS
- task: PowerShell@2
  displayName: 'Replace version number in AKS deployment yaml'
  inputs:
    targetType: inline
    script: |
      # Replace image tag in aks YAML
      ((Get-Content -path $(aksKubeDeploymentYaml) -Raw) -replace '##BUILD_ID##','$(imageTag)') |
      Set-Content -Path $(aksKubeDeploymentYaml)

      # Get content
      Get-Content -path $(aksKubeDeploymentYaml)

- task: Kubernetes@1
  displayName: 'kubectl apply'
  inputs:
    kubernetesServiceEndpoint: $(kubernetesServiceEndpoint)
    azureSubscriptionEndpoint: $(serviceConnection)
    azureResourceGroup: $(azureResourceGroupName)
    kubernetesCluster: $(aksClusterName)
    arguments: '-f $(aksKubeDeploymentYaml)'
    command: 'apply'
```
